import xgboost as xgb

from xgboost import plot_importance

import pandas as pd

import numpy as np

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn.metrics import confusion_matrix, accuracy_score

from sklearn.metrics import precision_score, recall_score

from sklearn.metrics import f1_score, roc_auc_score

from xgboost import XGBClassifier

import warnings

warnings.filterwarnings('ignore')

dataset = load_breast_cancer()

X_features= dataset.data

y_label = dataset.target

cancer_df = pd.DataFrame(data=X_features, columns=dataset.feature_names)

cancer_df['target']= y_label

cancer_df.head(3)

Out[28]:

| | mean radius | mean texture | mean perimeter | mean area | mean smoothness | mean compactness | mean concavity | mean concave points | mean symmetry | mean fractal dimension | ... | worst texture | worst perimeter | worst area | worst smoothness | worst compactness | worst concavity | worst concave points | worst symmetry | worst fractal dimension | target |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 17.99 | 10.38 | 122.8 | 1001.0 | 0.11840 | 0.27760 | 0.3001 | 0.14710 | 0.2419 | 0.07871 | ... | 17.33 | 184.6 | 2019.0 | 0.1622 | 0.6656 | 0.7119 | 0.2654 | 0.4601 | 0.11890 | 0 |
| 1 | 20.57 | 17.77 | 132.9 | 1326.0 | 0.08474 | 0.07864 | 0.0869 | 0.07017 | 0.1812 | 0.05667 | ... | 23.41 | 158.8 | 1956.0 | 0.1238 | 0.1866 | 0.2416 | 0.1860 | 0.2750 | 0.08902 | 0 |
| 2 | 19.69 | 21.25 | 130.0 | 1203.0 | 0.10960 | 0.15990 | 0.1974 | 0.12790 | 0.2069 | 0.05999 | ... | 25.53 | 152.5 | 1709.0 | 0.1444 | 0.4245 | 0.4504 | 0.2430 | 0.3613 | 0.08758 | 0 |

3 rows × 31 columns

In [11]:

print(dataset.target_names)

print(cancer_df['target'].value_counts())

['malignant' 'benign']

1 357

0 212

Name: target, dtype: int64
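The order of `target_names` gives the meaning of the integer labels: index 0 is malignant and index 1 is benign. A minimal sketch of the class balance, using only the counts printed above (the variable names here are illustrative and not part of the notebook):

```python
# Class names and counts copied from the output above.
target_names = ["malignant", "benign"]  # list index = integer label
counts = {1: 357, 0: 212}

total = sum(counts.values())
# Share of each class, keyed by its readable name.
shares = {target_names[label]: n / total for label, n in counts.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

Because scikit-learn's binary metrics treat label 1 as the positive class by default, the precision and recall reported later describe the benign class.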

In [29]:

# Use 80% of the data for training and hold out 20% for testing

X_train, X_test, y_train, y_test=train_test_split(X_features, y_label,

test_size=0.2, random_state=156 )

print(X_train.shape , X_test.shape)

(455, 30) (114, 30)
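The shapes above follow from simple arithmetic on the 569 rows. A small sketch, assuming the test share is rounded up to a whole row (which is consistent with the 114 test rows printed above):

```python
import math

n_samples = 569   # 357 + 212 rows in the dataset
test_size = 0.2

n_test = math.ceil(test_size * n_samples)  # 113.8 rounds up to 114
n_train = n_samples - n_test               # the remaining rows
print((n_train, 30), (n_test, 30))
```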

In [30]:

xgb_clt=XGBClassifier(n_estimators = 400, learning_rate=0.1, max_depth = 3, )

xgb_clt.fit(X_train, y_train)

pred = xgb_clt.predict(X_test)

pred_proba=xgb_clt.predict_proba(X_test)

[13:48:47] WARNING: C:/Users/Administrator/workspace/xgboost-win64_release_1.5.1/src/learner.cc:1115: Starting in XGBoost 1.3.0, the default evaluation metric used with the objective 'binary:logistic' was changed from 'error' to 'logloss'. Explicitly set eval_metric if you'd like to restore the old behavior.

In [31]:

# Modified get_clf_eval() function

from sklearn.metrics import roc_curve

import matplotlib.pyplot as plt

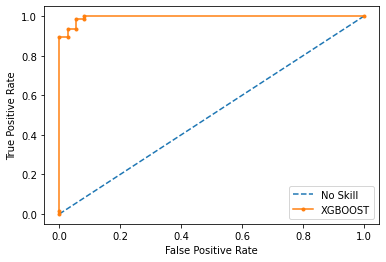

def get_clf_eval(y_test, pred):
    confusion = confusion_matrix(y_test, pred)
    accuracy = accuracy_score(y_test, pred)
    precision = precision_score(y_test, pred)
    recall = recall_score(y_test, pred)
    f1 = f1_score(y_test, pred)
    # ROC-AUC added. Note: it is computed here from the hard 0/1 predictions,
    # not from the predicted probabilities.
    roc_auc = roc_auc_score(y_test, pred)

    # Positive-class probabilities, read from the global pred_proba
    lr_probs = pred_proba[:, 1]
    # "No skill" baseline: the same constant score for every sample
    ns_probs = [0 for _ in range(len(y_test))]
    ns_fpr, ns_tpr, _ = roc_curve(y_test, ns_probs)
    lr_fpr, lr_tpr, _ = roc_curve(y_test, lr_probs)

    # Plot the ROC curve of the model against the baseline
    plt.plot(ns_fpr, ns_tpr, linestyle="--", label="No Skill")
    plt.plot(lr_fpr, lr_tpr, marker=".", label="XGBOOST")
    plt.xlabel('False Positive Rate')
    plt.ylabel('True Positive Rate')
    plt.legend()
    plt.show()

    print('Confusion matrix')
    print(confusion)
    # ROC-AUC added to the printed summary
    print('Accuracy: {0:.4f}, Precision: {1:.4f}, Recall: {2:.4f}, '
          'F1: {3:.4f}, AUC:{4:.4f}'.format(accuracy, precision, recall, f1, roc_auc))
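The function passes the hard 0/1 predictions to `roc_auc_score`, while the plotted curve uses the probabilities, so the printed AUC and the curve need not describe the same thing. The sketch below shows the difference on made-up data; `pairwise_auc` is a hypothetical pure-Python helper written for this illustration, not a library function, and the labels and scores are invented.

```python
def pairwise_auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Made-up labels and positive-class probabilities, for illustration only.
y_true = [0, 0, 1, 1, 1, 0]
proba = [0.10, 0.45, 0.40, 0.80, 0.95, 0.30]
hard = [1 if p >= 0.5 else 0 for p in proba]  # thresholded at 0.5

auc_from_proba = pairwise_auc(y_true, proba)
auc_from_labels = pairwise_auc(y_true, hard)
print(f"{auc_from_proba:.4f} {auc_from_labels:.4f}")
```

Thresholding discards the ranking information inside each predicted class, which is why the two values differ. In the notebook, a probability-based figure would come from `roc_auc_score(y_test, pred_proba[:, 1])`.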

In [32]:

get_clf_eval(y_test, pred )

Confusion matrix

[[35 2]

[ 1 76]]

Accuracy: 0.9737, Precision: 0.9744, Recall: 0.9870, F1: 0.9806, AUC:0.9665
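Every figure in this summary can be recomputed by hand from the four cells of the confusion matrix. A worked sketch, assuming the usual scikit-learn layout (rows are true labels, columns are predicted labels, label 1 is the positive class):

```python
# Cells copied from the confusion matrix above.
tn, fp = 35, 2
fn, tp = 1, 76

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)            # true positive rate
specificity = tn / (tn + fp)       # true negative rate
f1 = 2 * precision * recall / (precision + recall)
# With hard 0/1 predictions the ROC curve has a single interior point,
# so its area reduces to the mean of recall and specificity.
auc_from_labels = (recall + specificity) / 2

print(f"{accuracy:.4f} {precision:.4f} {recall:.4f} {f1:.4f} {auc_from_labels:.4f}")
```

The last value reproduces the reported AUC, which indicates that the figure was computed from the predicted labels rather than from the predicted probabilities.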

In [33]:

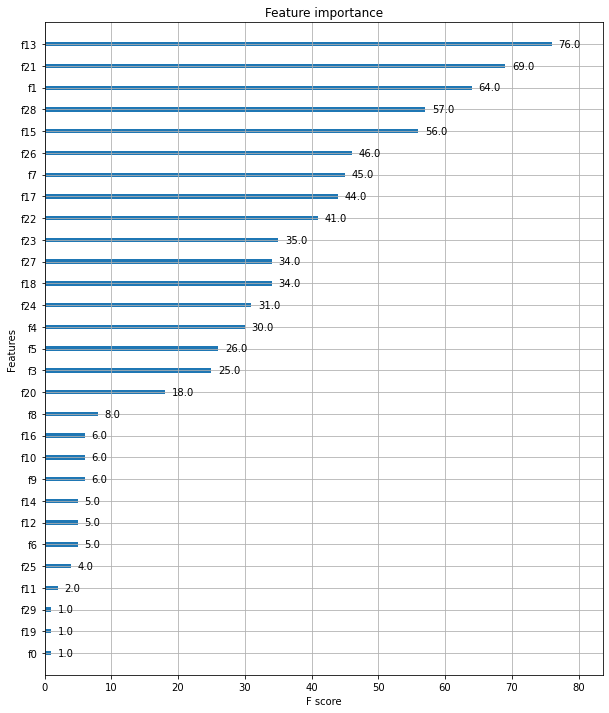

fig, ax = plt.subplots(figsize=(10,12))

plot_importance(xgb_clt, ax=ax)

Out[33]:

<AxesSubplot:title={'center':'Feature importance'}, xlabel='F score', ylabel='Features'>

In [43]:

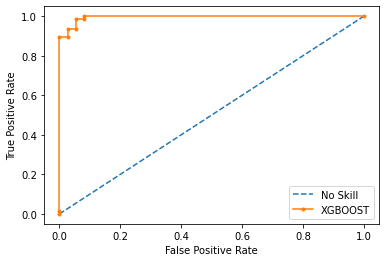

xgb_clt=XGBClassifier(n_estimators = 400, learning_rate=0.1, max_depth = 3, )

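# Stop early if the validation logloss has not improved for 200 consecutive rounds (out of at most 400).
# Note: passing early_stopping_rounds and eval_metric to fit() has been deprecated since XGBoost 1.6.
xgb_clt.fit(X_train, y_train, early_stopping_rounds=200, eval_metric='logloss', eval_set=[(X_test,y_test)], verbose=True)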

pred = xgb_clt.predict(X_test)

pred_proba=xgb_clt.predict_proba(X_test)

ns_probs = [0 for _ in range(len(y_test))]

ns_fpr, ns_tpr, _ = roc_curve(y_test, ns_probs)

[0] validation_0-logloss:0.61352

[1] validation_0-logloss:0.54784

[2] validation_0-logloss:0.49425

[3] validation_0-logloss:0.44799

[4] validation_0-logloss:0.40911

[5] validation_0-logloss:0.37498

[6] validation_0-logloss:0.34571

[7] validation_0-logloss:0.32053

[8] validation_0-logloss:0.29721

[9] validation_0-logloss:0.27799

[10] validation_0-logloss:0.26030

[11] validation_0-logloss:0.24604

[12] validation_0-logloss:0.23156

[13] validation_0-logloss:0.22005

[14] validation_0-logloss:0.20857

[15] validation_0-logloss:0.19999

[16] validation_0-logloss:0.19012

[17] validation_0-logloss:0.18182

[18] validation_0-logloss:0.17473

[19] validation_0-logloss:0.16766

[20] validation_0-logloss:0.15820

[21] validation_0-logloss:0.15473

[22] validation_0-logloss:0.14895

[23] validation_0-logloss:0.14331

[24] validation_0-logloss:0.13634

[25] validation_0-logloss:0.13278

[26] validation_0-logloss:0.12791

[27] validation_0-logloss:0.12526

[28] validation_0-logloss:0.11998

[29] validation_0-logloss:0.11641

[30] validation_0-logloss:0.11450

[31] validation_0-logloss:0.11257

[32] validation_0-logloss:0.11154

[33] validation_0-logloss:0.10868

[34] validation_0-logloss:0.10668

[35] validation_0-logloss:0.10421

[36] validation_0-logloss:0.10296

[37] validation_0-logloss:0.10058

[38] validation_0-logloss:0.09868

[39] validation_0-logloss:0.09644

[40] validation_0-logloss:0.09587

[41] validation_0-logloss:0.09424

[42] validation_0-logloss:0.09471

[43] validation_0-logloss:0.09427

[44] validation_0-logloss:0.09389

[45] validation_0-logloss:0.09418

[46] validation_0-logloss:0.09402

[47] validation_0-logloss:0.09236

[48] validation_0-logloss:0.09301

[49] validation_0-logloss:0.09127

[50] validation_0-logloss:0.09005

[51] validation_0-logloss:0.08961

[52] validation_0-logloss:0.08958

[53] validation_0-logloss:0.09070

[54] validation_0-logloss:0.08958

[55] validation_0-logloss:0.09036

[56] validation_0-logloss:0.09159

[57] validation_0-logloss:0.09153

[58] validation_0-logloss:0.09199

[59] validation_0-logloss:0.09195

[60] validation_0-logloss:0.09194

[61] validation_0-logloss:0.09146

[62] validation_0-logloss:0.09031

[63] validation_0-logloss:0.08941

[64] validation_0-logloss:0.08972

[65] validation_0-logloss:0.08974

[66] validation_0-logloss:0.08962

[67] validation_0-logloss:0.08873

[68] validation_0-logloss:0.08862

[69] validation_0-logloss:0.08974

[70] validation_0-logloss:0.08998

[71] validation_0-logloss:0.08978

[72] validation_0-logloss:0.08958

[73] validation_0-logloss:0.08953

[74] validation_0-logloss:0.08875

[75] validation_0-logloss:0.08860

[76] validation_0-logloss:0.08812

[77] validation_0-logloss:0.08840

[78] validation_0-logloss:0.08874

[79] validation_0-logloss:0.08815

[80] validation_0-logloss:0.08758

[81] validation_0-logloss:0.08741

[82] validation_0-logloss:0.08849

[83] validation_0-logloss:0.08858

[84] validation_0-logloss:0.08807

[85] validation_0-logloss:0.08764

[86] validation_0-logloss:0.08742

[87] validation_0-logloss:0.08761

[88] validation_0-logloss:0.08707

[89] validation_0-logloss:0.08727

[90] validation_0-logloss:0.08716

[91] validation_0-logloss:0.08696

[92] validation_0-logloss:0.08717

[93] validation_0-logloss:0.08707

[94] validation_0-logloss:0.08659

[95] validation_0-logloss:0.08612

[96] validation_0-logloss:0.08714

[97] validation_0-logloss:0.08677

[98] validation_0-logloss:0.08669

[99] validation_0-logloss:0.08655

[100] validation_0-logloss:0.08650

[101] validation_0-logloss:0.08641

[102] validation_0-logloss:0.08629

[103] validation_0-logloss:0.08626

[104] validation_0-logloss:0.08683

[105] validation_0-logloss:0.08677

[106] validation_0-logloss:0.08732

[107] validation_0-logloss:0.08730

[108] validation_0-logloss:0.08728

[109] validation_0-logloss:0.08730

[110] validation_0-logloss:0.08729

[111] validation_0-logloss:0.08800

[112] validation_0-logloss:0.08794

[113] validation_0-logloss:0.08784

[114] validation_0-logloss:0.08807

[115] validation_0-logloss:0.08765

[116] validation_0-logloss:0.08730

[117] validation_0-logloss:0.08780

[118] validation_0-logloss:0.08775

[119] validation_0-logloss:0.08768

[120] validation_0-logloss:0.08763

[121] validation_0-logloss:0.08757

[122] validation_0-logloss:0.08755

[123] validation_0-logloss:0.08716

[124] validation_0-logloss:0.08767

[125] validation_0-logloss:0.08774

[126] validation_0-logloss:0.08828

[127] validation_0-logloss:0.08831

[128] validation_0-logloss:0.08827

[129] validation_0-logloss:0.08789

[130] validation_0-logloss:0.08886

[131] validation_0-logloss:0.08868

[132] validation_0-logloss:0.08874

[133] validation_0-logloss:0.08922

[134] validation_0-logloss:0.08918

[135] validation_0-logloss:0.08882

[136] validation_0-logloss:0.08851

[137] validation_0-logloss:0.08848

[138] validation_0-logloss:0.08839

[139] validation_0-logloss:0.08915

[140] validation_0-logloss:0.08911

[141] validation_0-logloss:0.08876

[142] validation_0-logloss:0.08868

[143] validation_0-logloss:0.08839

[144] validation_0-logloss:0.08927

[145] validation_0-logloss:0.08924

[146] validation_0-logloss:0.08914

[147] validation_0-logloss:0.08891

[148] validation_0-logloss:0.08942

[149] validation_0-logloss:0.08939

[150] validation_0-logloss:0.08911

[151] validation_0-logloss:0.08873

[152] validation_0-logloss:0.08872

[153] validation_0-logloss:0.08848

[154] validation_0-logloss:0.08847

[155] validation_0-logloss:0.08854

[156] validation_0-logloss:0.08852

[157] validation_0-logloss:0.08855

[158] validation_0-logloss:0.08828

[159] validation_0-logloss:0.08830

[160] validation_0-logloss:0.08828

[161] validation_0-logloss:0.08801

[162] validation_0-logloss:0.08776

[163] validation_0-logloss:0.08778

[164] validation_0-logloss:0.08778

[165] validation_0-logloss:0.08752

[166] validation_0-logloss:0.08754

[167] validation_0-logloss:0.08764

[168] validation_0-logloss:0.08739

[169] validation_0-logloss:0.08738

[170] validation_0-logloss:0.08730

[171] validation_0-logloss:0.08737

[172] validation_0-logloss:0.08740

[173] validation_0-logloss:0.08739

[174] validation_0-logloss:0.08713

[175] validation_0-logloss:0.08716

[176] validation_0-logloss:0.08696

[177] validation_0-logloss:0.08705

[178] validation_0-logloss:0.08697

[179] validation_0-logloss:0.08697

[180] validation_0-logloss:0.08704

[181] validation_0-logloss:0.08680

[182] validation_0-logloss:0.08683

[183] validation_0-logloss:0.08658

[184] validation_0-logloss:0.08659

[185] validation_0-logloss:0.08661

[186] validation_0-logloss:0.08637

[187] validation_0-logloss:0.08637

[188] validation_0-logloss:0.08630

[189] validation_0-logloss:0.08610

[190] validation_0-logloss:0.08602

[191] validation_0-logloss:0.08605

[192] validation_0-logloss:0.08615

[193] validation_0-logloss:0.08592

[194] validation_0-logloss:0.08592

[195] validation_0-logloss:0.08598

[196] validation_0-logloss:0.08601

[197] validation_0-logloss:0.08592

[198] validation_0-logloss:0.08585

[199] validation_0-logloss:0.08587

[200] validation_0-logloss:0.08589

[201] validation_0-logloss:0.08595

[202] validation_0-logloss:0.08573

[203] validation_0-logloss:0.08573

[204] validation_0-logloss:0.08575

[205] validation_0-logloss:0.08582

[206] validation_0-logloss:0.08584

[207] validation_0-logloss:0.08578

[208] validation_0-logloss:0.08569

[209] validation_0-logloss:0.08571

[210] validation_0-logloss:0.08581

[211] validation_0-logloss:0.08559

[212] validation_0-logloss:0.08580

[213] validation_0-logloss:0.08581

[214] validation_0-logloss:0.08574

[215] validation_0-logloss:0.08566

[216] validation_0-logloss:0.08584

[217] validation_0-logloss:0.08563

[218] validation_0-logloss:0.08573

[219] validation_0-logloss:0.08578

[220] validation_0-logloss:0.08579

[221] validation_0-logloss:0.08582

[222] validation_0-logloss:0.08576

[223] validation_0-logloss:0.08567

[224] validation_0-logloss:0.08586

[225] validation_0-logloss:0.08587

[226] validation_0-logloss:0.08593

[227] validation_0-logloss:0.08595

[228] validation_0-logloss:0.08587

[229] validation_0-logloss:0.08606

[230] validation_0-logloss:0.08600

[231] validation_0-logloss:0.08592

[232] validation_0-logloss:0.08610

[233] validation_0-logloss:0.08611

[234] validation_0-logloss:0.08617

[235] validation_0-logloss:0.08626

[236] validation_0-logloss:0.08629

[237] validation_0-logloss:0.08622

[238] validation_0-logloss:0.08639

[239] validation_0-logloss:0.08634

[240] validation_0-logloss:0.08618

[241] validation_0-logloss:0.08619

[242] validation_0-logloss:0.08625

[243] validation_0-logloss:0.08626

[244] validation_0-logloss:0.08629

[245] validation_0-logloss:0.08622

[246] validation_0-logloss:0.08640

[247] validation_0-logloss:0.08635

[248] validation_0-logloss:0.08628

[249] validation_0-logloss:0.08645

[250] validation_0-logloss:0.08629

[251] validation_0-logloss:0.08631

[252] validation_0-logloss:0.08636

[253] validation_0-logloss:0.08639

[254] validation_0-logloss:0.08649

[255] validation_0-logloss:0.08644

[256] validation_0-logloss:0.08629

[257] validation_0-logloss:0.08646

[258] validation_0-logloss:0.08639

[259] validation_0-logloss:0.08644

[260] validation_0-logloss:0.08646

[261] validation_0-logloss:0.08649

[262] validation_0-logloss:0.08644

[263] validation_0-logloss:0.08647

[264] validation_0-logloss:0.08632

[265] validation_0-logloss:0.08649

[266] validation_0-logloss:0.08654

[267] validation_0-logloss:0.08647

[268] validation_0-logloss:0.08650

[269] validation_0-logloss:0.08652

[270] validation_0-logloss:0.08669

[271] validation_0-logloss:0.08674

[272] validation_0-logloss:0.08683

[273] validation_0-logloss:0.08668

[274] validation_0-logloss:0.08664

[275] validation_0-logloss:0.08650

[276] validation_0-logloss:0.08636

[277] validation_0-logloss:0.08652

[278] validation_0-logloss:0.08657

[279] validation_0-logloss:0.08659

[280] validation_0-logloss:0.08668

[281] validation_0-logloss:0.08664

[282] validation_0-logloss:0.08650

[283] validation_0-logloss:0.08636

[284] validation_0-logloss:0.08640

[285] validation_0-logloss:0.08643

[286] validation_0-logloss:0.08646

[287] validation_0-logloss:0.08650

[288] validation_0-logloss:0.08637

[289] validation_0-logloss:0.08646

[290] validation_0-logloss:0.08645

[291] validation_0-logloss:0.08632

[292] validation_0-logloss:0.08628

[293] validation_0-logloss:0.08615

[294] validation_0-logloss:0.08620

[295] validation_0-logloss:0.08622

[296] validation_0-logloss:0.08631

[297] validation_0-logloss:0.08618

[298] validation_0-logloss:0.08626

[299] validation_0-logloss:0.08613

[300] validation_0-logloss:0.08618

[301] validation_0-logloss:0.08605

[302] validation_0-logloss:0.08602

[303] validation_0-logloss:0.08610

[304] validation_0-logloss:0.08598

[305] validation_0-logloss:0.08606

[306] validation_0-logloss:0.08597

[307] validation_0-logloss:0.08600

[308] validation_0-logloss:0.08600

[309] validation_0-logloss:0.08588

[310] validation_0-logloss:0.08592

[311] validation_0-logloss:0.08595

[312] validation_0-logloss:0.08603

[313] validation_0-logloss:0.08611

[314] validation_0-logloss:0.08599

[315] validation_0-logloss:0.08590

[316] validation_0-logloss:0.08595

[317] validation_0-logloss:0.08598

[318] validation_0-logloss:0.08600

[319] validation_0-logloss:0.08588

[320] validation_0-logloss:0.08597

[321] validation_0-logloss:0.08605

[322] validation_0-logloss:0.08609

[323] validation_0-logloss:0.08598

[324] validation_0-logloss:0.08598

[325] validation_0-logloss:0.08590

[326] validation_0-logloss:0.08578

[327] validation_0-logloss:0.08586

[328] validation_0-logloss:0.08594

[329] validation_0-logloss:0.08582

[330] validation_0-logloss:0.08587

[331] validation_0-logloss:0.08589

[332] validation_0-logloss:0.08592

[333] validation_0-logloss:0.08584

[334] validation_0-logloss:0.08574

[335] validation_0-logloss:0.08582

[336] validation_0-logloss:0.08589

[337] validation_0-logloss:0.08594

[338] validation_0-logloss:0.08583

[339] validation_0-logloss:0.08591

[340] validation_0-logloss:0.08583

[341] validation_0-logloss:0.08573

[342] validation_0-logloss:0.08568

[343] validation_0-logloss:0.08572

[344] validation_0-logloss:0.08580

[345] validation_0-logloss:0.08582

[346] validation_0-logloss:0.08571

[347] validation_0-logloss:0.08579

[348] validation_0-logloss:0.08583

[349] validation_0-logloss:0.08573

[350] validation_0-logloss:0.08566

[351] validation_0-logloss:0.08573

[352] validation_0-logloss:0.08581

[353] validation_0-logloss:0.08571

[354] validation_0-logloss:0.08566

[355] validation_0-logloss:0.08570

[356] validation_0-logloss:0.08563

[357] validation_0-logloss:0.08553

[358] validation_0-logloss:0.08560

[359] validation_0-logloss:0.08568

[360] validation_0-logloss:0.08558

[361] validation_0-logloss:0.08560

[362] validation_0-logloss:0.08564

[363] validation_0-logloss:0.08571

[364] validation_0-logloss:0.08579

[365] validation_0-logloss:0.08569

[366] validation_0-logloss:0.08573

[367] validation_0-logloss:0.08568

[368] validation_0-logloss:0.08559

[369] validation_0-logloss:0.08552

[370] validation_0-logloss:0.08559

[371] validation_0-logloss:0.08550

[372] validation_0-logloss:0.08556

[373] validation_0-logloss:0.08561

[374] validation_0-logloss:0.08563

[375] validation_0-logloss:0.08553

[376] validation_0-logloss:0.08561

[377] validation_0-logloss:0.08567

[378] validation_0-logloss:0.08571

[379] validation_0-logloss:0.08562

[380] validation_0-logloss:0.08558

[381] validation_0-logloss:0.08562

[382] validation_0-logloss:0.08564

[383] validation_0-logloss:0.08555

[384] validation_0-logloss:0.08562

[385] validation_0-logloss:0.08562

[386] validation_0-logloss:0.08555

[387] validation_0-logloss:0.08546

[388] validation_0-logloss:0.08550

[389] validation_0-logloss:0.08546

[390] validation_0-logloss:0.08532

[391] validation_0-logloss:0.08539

[392] validation_0-logloss:0.08530

[393] validation_0-logloss:0.08537

[394] validation_0-logloss:0.08530

[395] validation_0-logloss:0.08537

[396] validation_0-logloss:0.08528

[397] validation_0-logloss:0.08532

[398] validation_0-logloss:0.08528

[399] validation_0-logloss:0.08520
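The log runs through all 400 rounds, so early stopping never triggered. Going by the printed five-decimal values, the longest stretch without a new minimum is from round 211 (0.08559) to round 357 (0.08553), which is 146 rounds and therefore shorter than the 200-round patience. The rule can be sketched in plain Python as follows; `early_stopping_round` is a hypothetical helper and the loss values are made up, so this illustrates the idea rather than XGBoost's actual implementation.

```python
def early_stopping_round(losses, patience):
    """Return the round at which training would stop, or None if it never stops."""
    best_loss = float("inf")
    best_round = -1
    for i, loss in enumerate(losses):
        if loss < best_loss:
            # New minimum: remember it and reset the waiting period.
            best_loss, best_round = loss, i
        elif i - best_round >= patience:
            # No improvement for `patience` rounds in a row.
            return i
    return None

# Made-up validation losses, for illustration only.
losses = [0.60, 0.50, 0.45, 0.46, 0.47, 0.44, 0.45, 0.46, 0.47, 0.48]

print(early_stopping_round(losses, patience=2))
print(early_stopping_round(losses, patience=3))
print(early_stopping_round(losses, patience=5))
```

Under this rule, a patience of 100 would have ended the run above at about round 311, whereas a patience of 200 lets it finish. Since the full 400 trees are trained either way, this would explain why the metrics that follow match those of the earlier model.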

In [44]:

get_clf_eval(y_test, pred )

Confusion matrix

[[35 2]

[ 1 76]]

Accuracy: 0.9737, Precision: 0.9744, Recall: 0.9870, F1: 0.9806, AUC:0.9665
